In our opinion, the best solution by far is to use rackmount GPU cluster nodes where you can get 10Gbit or Infiniband connectivity and have the fans spin at 100% 24/7 without disturbing anybody.

Unfortunately, there is one complication: When people first started using GPUs for computing the power and cooling requirements were a big

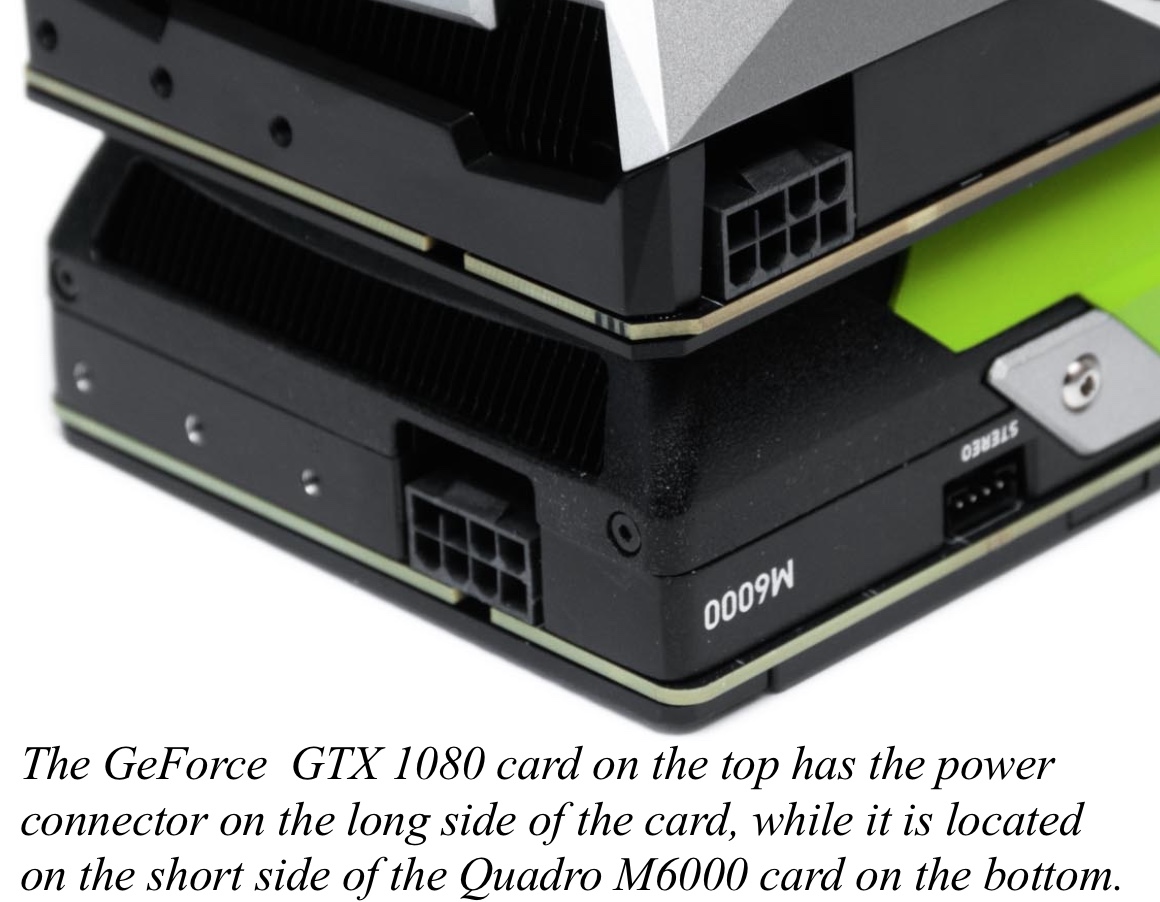

challenge for vendors. To optimize things, NVIDIA (and AMD, Intel) have created special passively cooled cards for servers, i.e. the GPU is cooled by the server’s fans instead of its own. To save space, the professional Tesla (as well as Quadro) cards also have their power connector on the short side of the card, and most vendors have used this. In other words, in most cluster nodes there is no space for a power connector placed on the side of the card – which means consumer cards will not fit physically in most rackmounted servers.

There are a couple of ways around this. First, you can get workstations (e.g. Dell T630 or Supermicro SYS-7048GR-TR) that can be turned into 4U rackmounted servers. These work great, but might take up a bit of extra space in your racks. Second, some vendors might be able to sell you servers with extra-tall lids, which effectively turns a 4U server into a 4.5U server so you can fit the GeForce power connectors. This is most common for 8-way GPU systems, but since RELION-2 still does some of its work on the CPU, we prefer quad-GPU systems in terms of value for money and versatility.

However… the nicest solution is that a couple of vendors now sell 1U rackmount nodes specifically designed to accommodate GeForce cards too. In particular, we love Supermicro’s SYS-1028GQ-TRT.


You might not find a lot of information about this on vendor web pages, but that is mostly because neither Supermicro nor NVIDIA officially certifies this combination (but they still work great together). The short story:

- 1U rackmount server, 2kW power supply

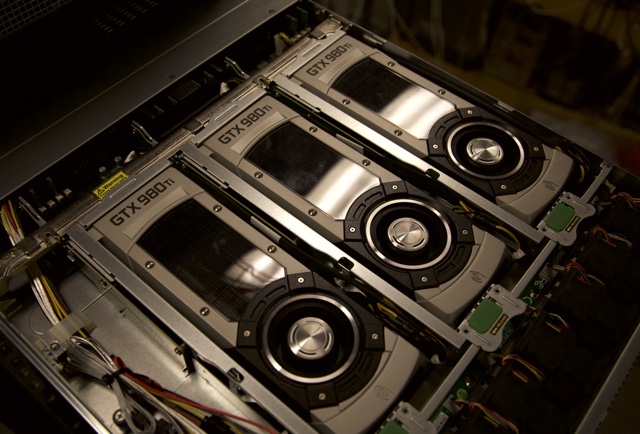

- Room for four GPUs: three in the front and a final one in the back.

- We got the version with 10Gbit ethernet built into the motherboard, and even with four GPUs there is room for one small PCIe card that you can use, e.g., for Infiniband.

- Drawback: There are only two small 2.5″ drive bays in the machine.

- There is room between the GPUs to fit power connectors on the side.

- You need to get PCIe power cables that fit the GPU you want to use. We went with 980Ti cables, since those cards need 8+6 pins. The 1080 cards only need 8 pins, but when a future 1080Ti appears we will probably need the extra six again.
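The connector requirements follow from the commonly quoted PCIe power limits: up to 75 W through the x16 slot, 75 W per 6-pin and 150 W per 8-pin auxiliary connector. The sketch below checks a cable configuration against a card’s board power, assuming NVIDIA reference board power figures (980 Ti 250 W, 1080 180 W, 1070 150 W). Vendor and overclocked models may draw more, and in practice you simply populate every power connector the card has.

```python
# Commonly quoted PCIe power limits, in watts.
SLOT_W = 75        # PCIe x16 slot
SIX_PIN_W = 75     # 6-pin auxiliary connector
EIGHT_PIN_W = 150  # 8-pin auxiliary connector

# Assumed reference board power (TDP) in watts; vendor cards may differ.
BOARD_POWER_W = {
    "GTX 980 Ti": 250,
    "GTX 1080": 180,
    "GTX 1070": 150,
}


def deliverable_watts(eight_pin: int, six_pin: int) -> int:
    """In-spec power available to a card: slot plus auxiliary connectors."""
    return SLOT_W + eight_pin * EIGHT_PIN_W + six_pin * SIX_PIN_W


def cable_fits(card: str, eight_pin: int, six_pin: int) -> bool:
    """True if the connector set covers the card's nominal board power."""
    return BOARD_POWER_W[card] <= deliverable_watts(eight_pin, six_pin)


if __name__ == "__main__":
    for card in BOARD_POWER_W:
        print(f"{card}: 8-pin only -> {cable_fits(card, 1, 0)}, "
              f"8+6-pin -> {cable_fits(card, 1, 1)}")
```

With these numbers a single 8-pin connector (225 W including the slot) is enough for the 1080 and 1070 but not for the 980 Ti, which matches the 8+6-pin cables chosen above.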

To actually mount consumer cards in the SYS-1028GQ-TRT you will need to unscrew the mounting bracket (i.e., the metal piece around the display connectors). You won’t need it in the node anyway. We actually removed the backplates too, but that is probably not necessary.

There is a whole row of high-power cooling fans just in front of the GPUs. At some point we intend to test whether we get even better cooling by disassembling the GPUs to disable their built-in fans, but for now this is good enough!

Even with the fast dual 10Gbit connection to our file server, we decided to add a small SSD for caching. Here’s the setup we got for roughly $4000 per node (May 2016, GPU cost not included):

- Supermicro SYS-1028GQ-TRT (the version with built-in 10Gbit ethernet)

- Dual Xeon E5-2620v4 CPUs (8 cores each).

- 128GB DDR4 memory

- Cables for 980Ti GPUs

- A small 32GB Supermicro SuperDOM to boot the OS (disk-on-module, essentially a small SSD that you plug directly into the motherboard so you don’t waste one of the only two 2.5″ SATA bays).

- A separate 512GB Samsung 850Pro SSD for caching.
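The 2kW power supply leaves comfortable headroom for this configuration. A back-of-the-envelope budget, assuming nominal figures: 250 W board power per GPU (the GTX 980 Ti reference value, the hungriest card discussed here), 85 W TDP per Xeon E5-2620 v4, and a hypothetical 200 W allowance for everything else (memory, fans at full speed, drives, NICs) that you should replace with measured numbers. Transient spikes and overclocked cards can exceed these nominal values.

```python
# Back-of-the-envelope power budget for one quad-GPU 1U node.
# All figures are nominal assumptions; replace them with datasheet or
# measured values for your own parts.
PSU_W = 2000   # power supply rating of the node
GPU_W = 250    # reference board power of a GTX 980 Ti (worst case here)
N_GPUS = 4
CPU_W = 85     # TDP of one Xeon E5-2620 v4
N_CPUS = 2
OTHER_W = 200  # hypothetical allowance: memory, fans, drives, NICs


def node_load_watts(gpu_w: int = GPU_W, n_gpus: int = N_GPUS,
                    cpu_w: int = CPU_W, n_cpus: int = N_CPUS,
                    other_w: int = OTHER_W) -> int:
    """Estimated sustained load of the whole node in watts."""
    return n_gpus * gpu_w + n_cpus * cpu_w + other_w


if __name__ == "__main__":
    load = node_load_watts()
    headroom = PSU_W - load
    print(f"estimated load {load} W, headroom {headroom} W")
```

With these assumptions the estimate is 1370 W, leaving about 630 W of headroom on the 2kW supply.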

It might seem stupid to use two separate SSDs. However, when SSDs are used for caching and see several terabytes of writes per day, even mid-range/semi-professional drives can wear out. By putting the OS on a separate small module that we almost never write to, that disk will not wear out. If/when the cache-only disk wears out, you can just throw it away and push in a new one in a matter of minutes.
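To put the wear-out argument in numbers: SSD endurance is usually quoted in terabytes written (TBW), so the time to reach the rating is simply the rating divided by the daily write volume. A minimal sketch, assuming a 300 TBW rating for the cache SSD (check the datasheet for your actual drive) and the few terabytes per day of cache writes mentioned above.

```python
def days_until_rated_endurance(endurance_tbw: float,
                               writes_tb_per_day: float) -> float:
    """Days until a drive reaches its rated terabytes-written (TBW) endurance."""
    if writes_tb_per_day <= 0:
        raise ValueError("writes_tb_per_day must be positive")
    return endurance_tbw / writes_tb_per_day


if __name__ == "__main__":
    ASSUMED_TBW = 300  # assumed endurance rating; check your drive's datasheet
    for tb_per_day in (1, 2, 3):
        days = days_until_rated_endurance(ASSUMED_TBW, tb_per_day)
        print(f"{tb_per_day} TB/day -> about {days:.0f} days")
```

At 3 TB/day the assumed rating is reached in about 100 days, which is why the cache disk is best treated as a consumable, while the rarely written boot module is not. The rating is a warranty figure rather than a guaranteed failure point.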

If you are not comfortable building things like this yourself, there are some vendors that are happy to sell you complete systems with consumer GPUs where they have already burnt in the GPUs for you. Since RELION-GPU is still very new, you can look for systems intended for molecular dynamics, e.g. from Exxact, who have been catering to this market for years.

Update 2016-10-21: When we first wrote this page, the only cards on the market were the “Founder’s edition” models. These are still fine, but by now they have mostly been replaced by vendor models and can be hard to get. We’ve been quite happy with the even cheaper ASUS TURBO-GTX1080 ($650) or ASUS TURBO-GTX1070 ($395). However, we recommend being a bit careful here! A lot of vendors (including ASUS; avoid their “dual” models) offer overclocked cards that provide even better performance. That can work great when you only put a single card in the machine, but these cards achieve their improved cooling with bulkier coolers that blow the air transversally across the card instead of longitudinally along it. In a cluster node, where air can only flow longitudinally, this would be a complete disaster! You can identify these cards by name suffixes such as “OC”, by their having several fans, or by the direction of the fins on the coolers. For a cluster node it is not just a matter of risk: there is no way overclocked cards with transversal air flow will survive long term.
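The identification rules above can be turned into a rough screening helper for a shopping list. This is a hypothetical sketch of our own: it flags an “OC” token in the name, ASUS-style “DUAL” branding, or more than one fan. It cannot see fin direction, so always inspect the cooler itself before buying. Card names other than the ASUS TURBO model are illustrative examples.

```python
import re


def likely_transversal_airflow(name: str, fan_count: int) -> bool:
    """Rough screen for cards that probably exhaust air sideways.

    Flags an "OC" token in the name, "DUAL" branding, or more than one fan.
    Single-fan blower cards (e.g. ASUS TURBO) pass. Fin direction cannot be
    checked here, so treat a pass as "not obviously bad", not as approval.
    """
    tokens = set(re.split(r"[^A-Z0-9]+", name.upper()))
    return "OC" in tokens or "DUAL" in tokens or fan_count > 1


if __name__ == "__main__":
    # Names other than ASUS TURBO-GTX1080 are illustrative examples.
    candidates = [
        ("ASUS TURBO-GTX1080", 1),
        ("Example GTX 1080 OC", 1),
        ("ASUS DUAL-GTX1070", 2),
        ("Example GTX 1070 Triple-Fan", 3),
    ]
    for name, fans in candidates:
        verdict = "avoid" if likely_transversal_airflow(name, fans) else "ok"
        print(f"{name}: {verdict}")
```

Splitting on non-alphanumeric characters keeps the check from matching “OC” inside unrelated words, at the cost of missing suffixes glued directly to the model number.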